Quantifying and Combining Crypto Alphas#

In this article, I’ll take some crypto stat arb features from the previous chapter and show you how you might quantify their strength and decay characteristics and then combine them into a trading signal.

Ultimately, combining signals is about making sensible decisions about how you want each signal to contribute to the calculation of portfolio weights. And to make those decisions, you need to understand your signals:

Do they need to be scaled or transformed in some way to be useful?

How strongly are they predictive of forward returns?

How quickly does this predictive power decay?

How noisy is the signal? Does it bounce around all over the place or is it relatively stable?

How correlated are your signals to one another?

One you understand these questions, you can start to consider how to combine your signals. And there are no right or wrong answers, just different paths through the various trade-offs depending on your goals and constraints. When considering costs, the questions around signal decay and stability become critical.

And you might combine your signals using simple heuristics (equal weight, equal volatility contribution), or by selecting coefficients based on your understanding of how the signals behave. You can also use explicit optimisation techniques, but you don’t have to.

In this article, we’ll combine some cross-sectional signals with a time-series one, which has the effect of tipping the portfolio net long or short - which may or may not be acceptable depending on your constraints.

I’ll include all the code and link to the data so that you can follow along if you like.

Let’s get to it.

First, load some libraries and set our session options:

# session options

options(repr.plot.width = 14, repr.plot.height=7, warn = -1)

library(tidyverse)

library(tibbletime)

library(roll)

library(patchwork)

# chart options

theme_set(theme_bw())

theme_update(text = element_text(size = 20))

── Attaching core tidyverse packages ──────────────────────── tidyverse 2.0.0 ──

✔ dplyr 1.1.4 ✔ readr 2.1.4

✔ forcats 1.0.0 ✔ stringr 1.5.1

✔ ggplot2 3.4.4 ✔ tibble 3.2.1

✔ lubridate 1.9.3 ✔ tidyr 1.3.1

✔ purrr 1.0.2

── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──

✖ dplyr::filter() masks stats::filter()

✖ dplyr::lag() masks stats::lag()

ℹ Use the conflicted package (<http://conflicted.r-lib.org/>) to force all conflicts to become errors

Attaching package: 'tibbletime'

The following object is masked from 'package:stats':

filter

Next load some data. This dataset includes daily price, volume, and funding data for crypto perpetual futures contracts traded on Binance since late 2019.

You can get the data here.

perps <- read_csv("https://github.com/Robot-Wealth/r-quant-recipes/raw/master/quantifying-combining-alphas/binance_perp_daily.csv")

head(perps)

Rows: 187251 Columns: 11

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (1): ticker

dbl (9): open, high, low, close, dollar_volume, num_trades, taker_buy_volum...

date (1): date

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

| ticker | date | open | high | low | close | dollar_volume | num_trades | taker_buy_volume | taker_buy_quote_volumne | funding_rate |

|---|---|---|---|---|---|---|---|---|---|---|

| <chr> | <date> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> |

| BTCUSDT | 2019-09-11 | 10172.13 | 10293.11 | 9884.31 | 9991.84 | 85955369 | 10928 | 5169.153 | 52110075 | -3e-04 |

| BTCUSDT | 2019-09-12 | 9992.18 | 10365.15 | 9934.11 | 10326.58 | 157223498 | 19384 | 11822.980 | 119810012 | -3e-04 |

| BTCUSDT | 2019-09-13 | 10327.25 | 10450.13 | 10239.42 | 10296.57 | 189055129 | 25370 | 9198.551 | 94983470 | -3e-04 |

| BTCUSDT | 2019-09-14 | 10294.81 | 10396.40 | 10153.51 | 10358.00 | 206031349 | 31494 | 9761.462 | 100482121 | -3e-04 |

| BTCUSDT | 2019-09-15 | 10355.61 | 10419.97 | 10024.81 | 10306.37 | 211326874 | 27512 | 7418.716 | 76577710 | -3e-04 |

| BTCUSDT | 2019-09-16 | 10306.79 | 10353.81 | 10115.00 | 10120.07 | 208211376 | 29030 | 7564.376 | 77673986 | -3e-04 |

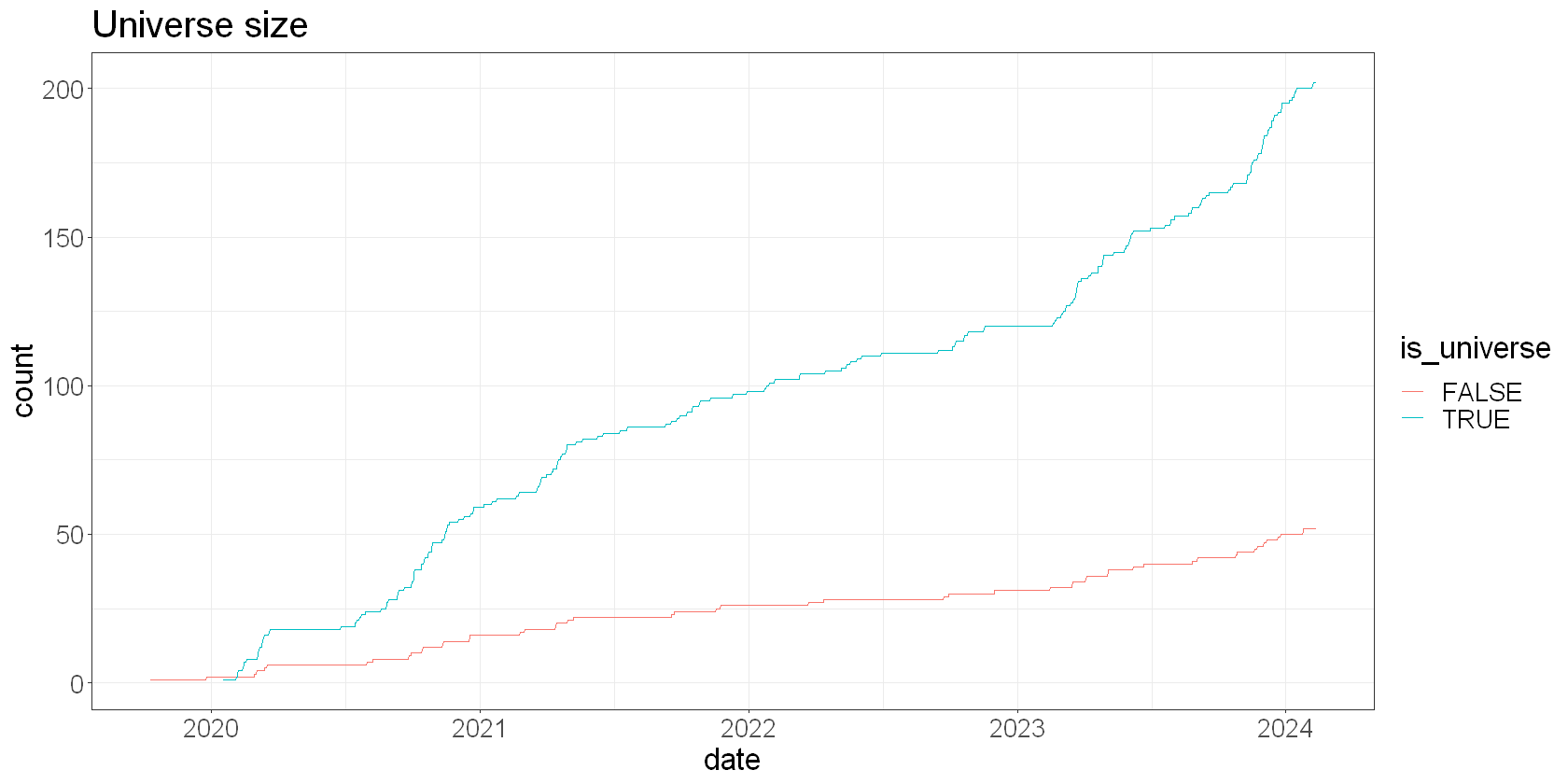

First, let’s constrain our universe by just removing the bottom 20% by trailing 30-day dollar volume.

How many perps are in our universe?

universe <- perps %>%

group_by(ticker) %>%

mutate(trail_volume = roll_mean(dollar_volume, 30)) %>%

# also calculate returns for later

mutate(

total_fwd_return_simple = dplyr::lead(funding_rate, 1) + (dplyr::lead(close, 1) - close)/close, # next day's simple return

total_fwd_return_simple_2 = dplyr::lead(total_fwd_return_simple, 1), # next day again simple return

total_fwd_return_log = log(1 + total_fwd_return_simple)

) %>%

na.omit() %>%

group_by(date) %>%

mutate(

volume_decile = ntile(trail_volume, 10),

is_universe = volume_decile >= 3

)

universe %>%

group_by(date, is_universe) %>%

summarize(count = n(), .groups = "drop") %>%

ggplot(aes(x=date, y=count, color = is_universe)) +

geom_line() +

labs(

title = 'Universe size'

)

Some simple features#

We’ll look at three very simple features implied by our brainstorming session.

Breakout - closeness to recent 20 day highs: (9.5 = new highs today / -9.5 = new highs 20 days ago)

Carry - funding over the last 24 hours

Momentum - how much has price changed over the last 10 days

My intent is to use the carry and momentum features as cross-sectional predictors of returns. That is, does relative carry/momentum predict out/under-performance?

And I’ll use the breakout feature as a time-series overlay. I hope to use it to predict forward returns for each asset in the time-series, not in the cross-section.

This is mostly to demonstrate how to combine cross-sectional and time-series features into a single long-short strategy. Adding time-series features to a long-short stat arb basket has the effect of making the basket net long or net short rather than delta neutral, so you may not want to do it, depending on your constraints.

First we calculate the raw features and lag them by one observation:

rolling_days_since_high_20 <- purrr::possibly(

tibbletime::rollify(

function(x) {

idx_of_high <- which.max(x)

days_since_high <- length(x) - idx_of_high

days_since_high

},

window = 20, na_value = NA),

otherwise = NA

)

features <- universe %>%

group_by(ticker) %>%

arrange(date) %>%

mutate(

# we won't lag features here because we're using forward returns

breakout = 9.5 - rolling_days_since_high_20(close), # puts this feature on a scale -9.5 to +9.5 (9.5 = high was today)

momo = close - lag(close, 10)/close,

carry = funding_rate

) %>%

ungroup() %>%

na.omit()

head(features)

| ticker | date | open | high | low | close | dollar_volume | num_trades | taker_buy_volume | taker_buy_quote_volumne | funding_rate | trail_volume | total_fwd_return_simple | total_fwd_return_simple_2 | total_fwd_return_log | volume_decile | is_universe | breakout | momo | carry |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| <chr> | <date> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <dbl> | <int> | <lgl> | <dbl> | <dbl> | <dbl> |

| BTCUSDT | 2019-10-29 | 9389.98 | 9569.10 | 9156.03 | 9354.63 | 1207496201 | 238440 | 63093.62 | 592624390 | -0.00117130 | 640692193 | -0.02797496 | 0.011791818 | -0.02837371 | 1 | FALSE | 7.5 | 9353.776 | -0.00117130 |

| BTCUSDT | 2019-10-30 | 9352.65 | 9492.86 | 8975.72 | 9102.91 | 1089606006 | 267844 | 58062.12 | 537610627 | -0.00106636 | 660931006 | 0.01179182 | -0.015317479 | 0.01172284 | 1 | FALSE | 6.5 | 9102.029 | -0.00106636 |

| BTCUSDT | 2019-10-31 | 9103.86 | 9438.64 | 8933.00 | 9218.70 | 851802094 | 229216 | 46234.79 | 423883668 | -0.00092829 | 680429873 | -0.01531748 | 0.025637957 | -0.01543600 | 1 | FALSE | 5.5 | 9217.811 | -0.00092829 |

| BTCUSDT | 2019-11-01 | 9218.70 | 9280.00 | 9011.00 | 9084.37 | 816001159 | 233091 | 43506.69 | 398347973 | -0.00074601 | 698120233 | 0.02563796 | -0.015217382 | 0.02531482 | 1 | FALSE | 4.5 | 9083.466 | -0.00074601 |

| BTCUSDT | 2019-11-02 | 9084.37 | 9375.00 | 9050.27 | 9320.00 | 653539543 | 204338 | 35617.67 | 328788833 | -0.00030000 | 710903610 | -0.01521738 | 0.011864661 | -0.01533435 | 1 | FALSE | 3.5 | 9319.204 | -0.00030000 |

| BTCUSDT | 2019-11-03 | 9319.00 | 9366.69 | 9105.00 | 9180.97 | 609237501 | 219662 | 31698.32 | 292888566 | -0.00030000 | 722483703 | 0.01186466 | 0.008212094 | 0.01179483 | 1 | FALSE | 2.5 | 9180.160 | -0.00030000 |

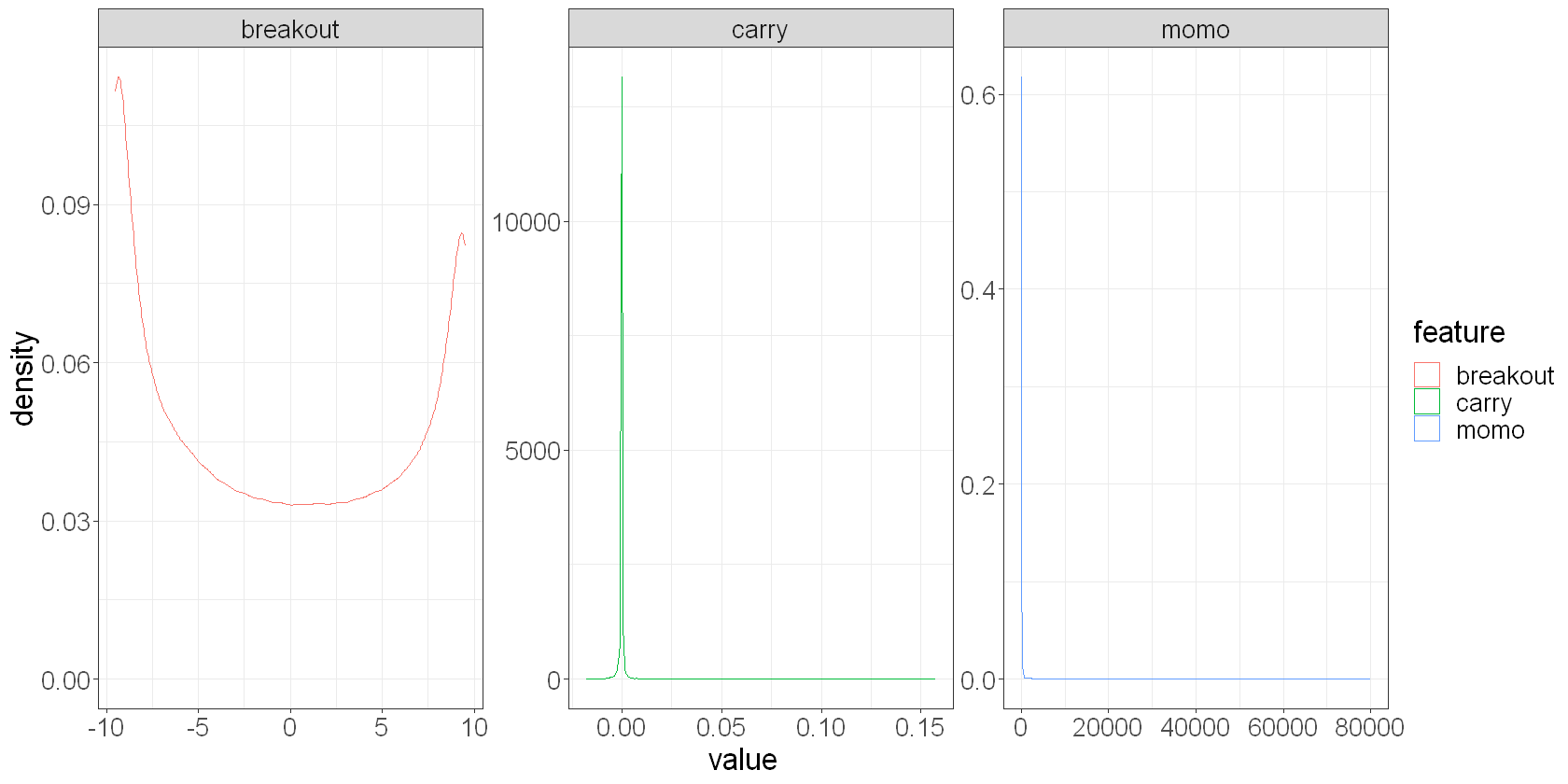

Exploring our features#

The first thing you’ll generally want to do is get a handle on the characteristics of our raw features. How are they distributed? Do their distributions imply the need for scaling?

features %>%

filter(is_universe) %>%

pivot_longer(c(breakout, momo, carry), names_to = "feature") %>%

ggplot(aes(x = value, colour = feature)) +

geom_density() +

facet_wrap(~feature, scales = "free")

We see that the breakout feature is not too badly behaved. Carry and momentum both have significant tails however, so we’re going to want to scale those features somehow.

We’ll scale them cross sectionally by:

calculating a zscore: the number of standard deviations each asset is away from the daily mean

sorting into deciles: sort the assets into 10 equal buckets by the value of the feature each day - this collapses the feature value down to a rank for each asset each day (multiple assets will have the same rank).

We’ll also calculate relative returns so that we can explore our features’ predictive utility in the time-series and the cross-section.

features_scaled <- features %>%

filter(is_universe) %>%

group_by(date) %>%

mutate(

demeaned_fwd_returns = total_fwd_return_simple - mean(total_fwd_return_simple),

zscore_carry = (carry - mean(carry, na.rm = TRUE)) / sd(carry, na.rm = TRUE),

decile_carry = ntile(carry, 10),

zscore_momo = (momo - mean(momo, na.rm = TRUE)) / sd(momo, na.rm = TRUE),

decile_momo = ntile(momo, 10),

) %>%

na.omit() %>%

ungroup()

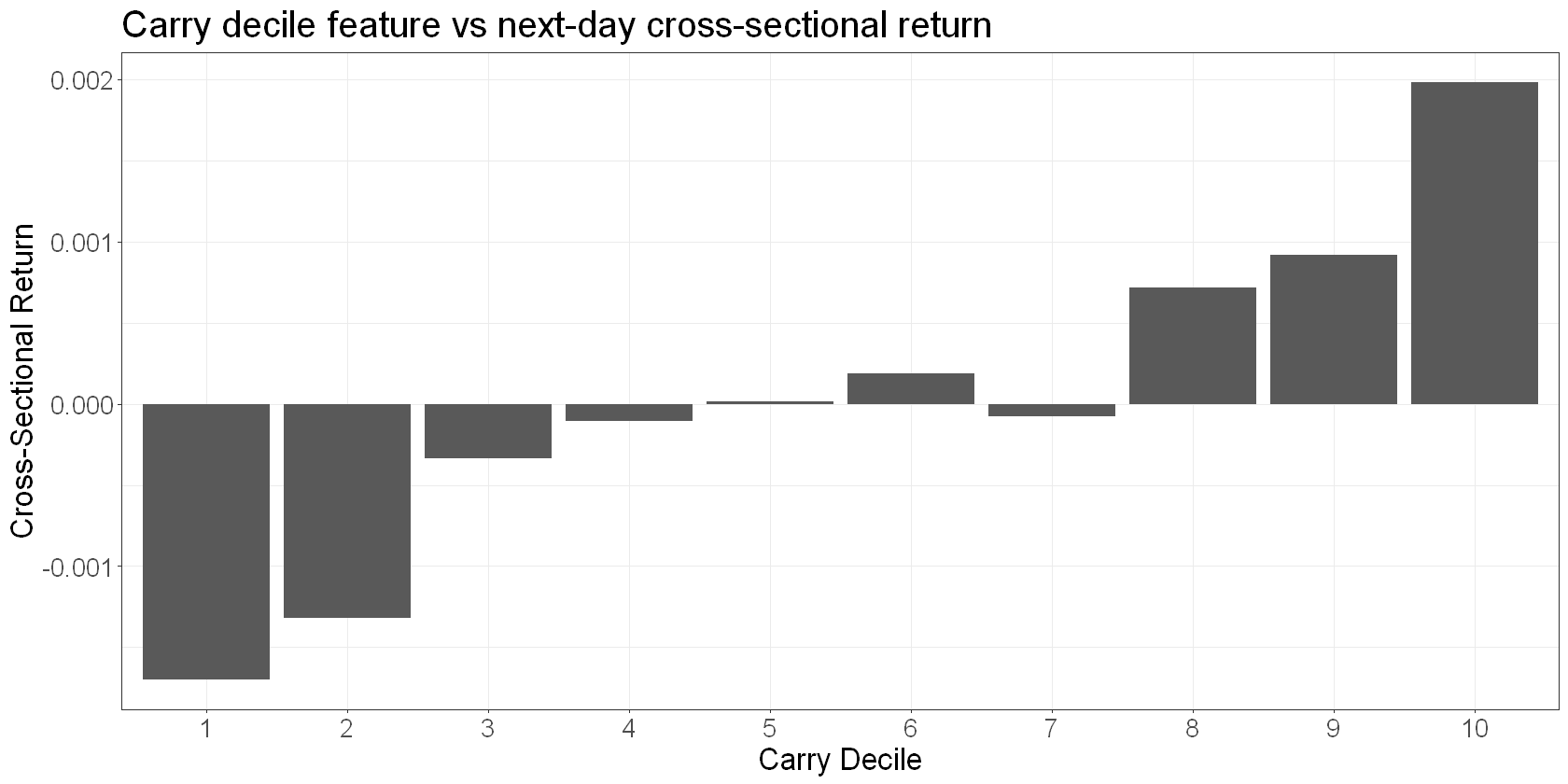

Next we would plot our features against next day returns. For example, here’s a factor plot of the decile_carry feature against next day relative returns:

features_scaled %>%

group_by(decile_carry) %>%

summarise(

mean_return = mean(mean(demeaned_fwd_returns))

) %>%

ggplot(aes(x = factor(decile_carry), y = mean_return)) +

geom_bar(stat = "identity") +

labs(

x = "Carry Decile",

y = "Cross-Sectional Return",

title = "Carry decile feature vs next-day cross-sectional return"

)

We see that it’s a good discriminator of the sign of next day returns, and the relationship is noisily linear, but much stronger in the tails.

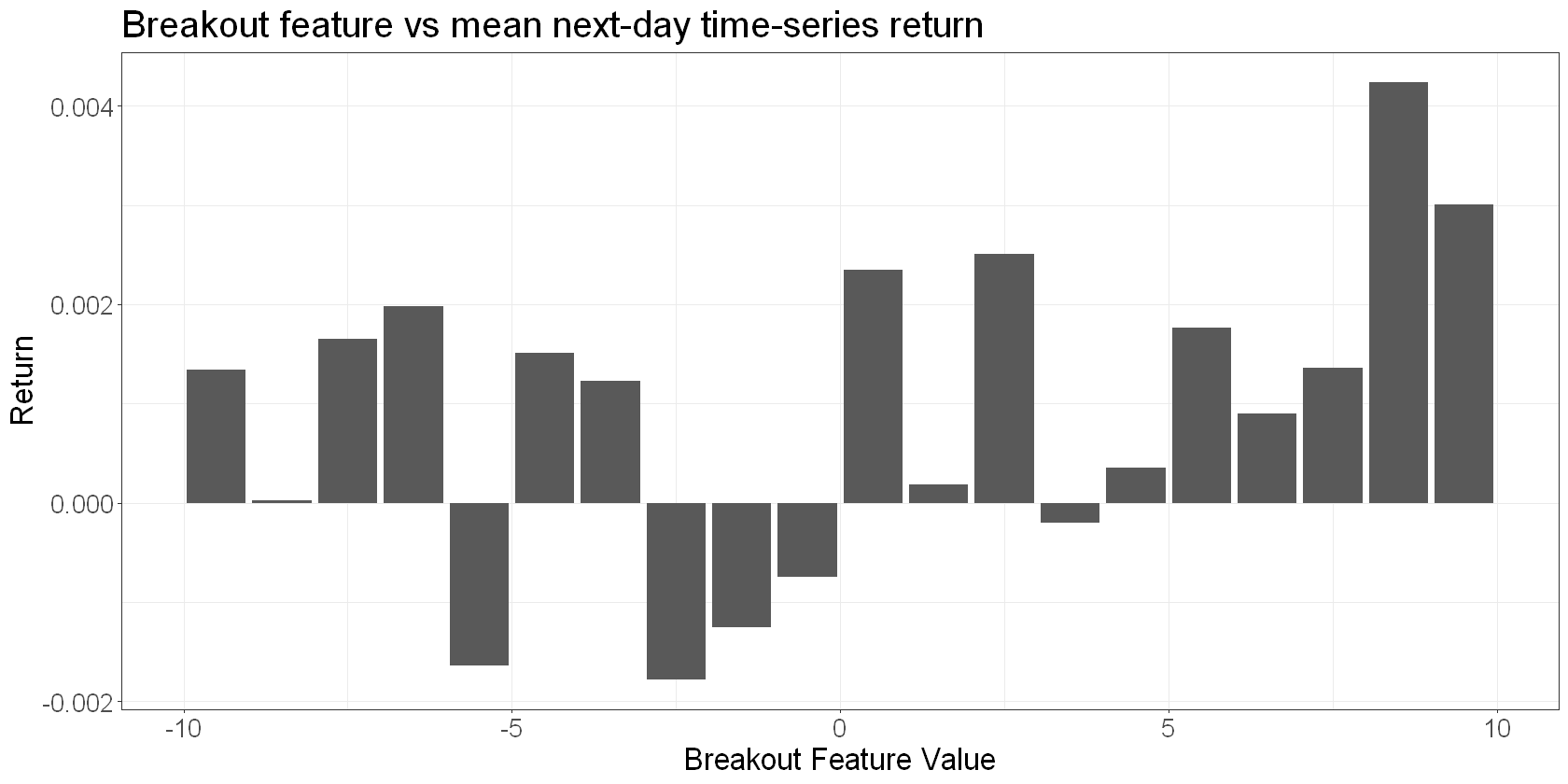

And here’s a plot of the breakout feature against mean next day time-series returns:

features_scaled %>%

group_by(breakout) %>%

summarise(

mean_return = mean(total_fwd_return_simple)

) %>%

ggplot(aes(x = breakout, y = mean_return)) +

geom_bar(stat = "identity") +

labs(

x = "Breakout Feature Value",

y = "Return",

title = "Breakout feature vs mean next-day time-series return"

)

We see a pretty noisy and non-linear relationship, but high values of the breakout feature are generally associated with higher returns.

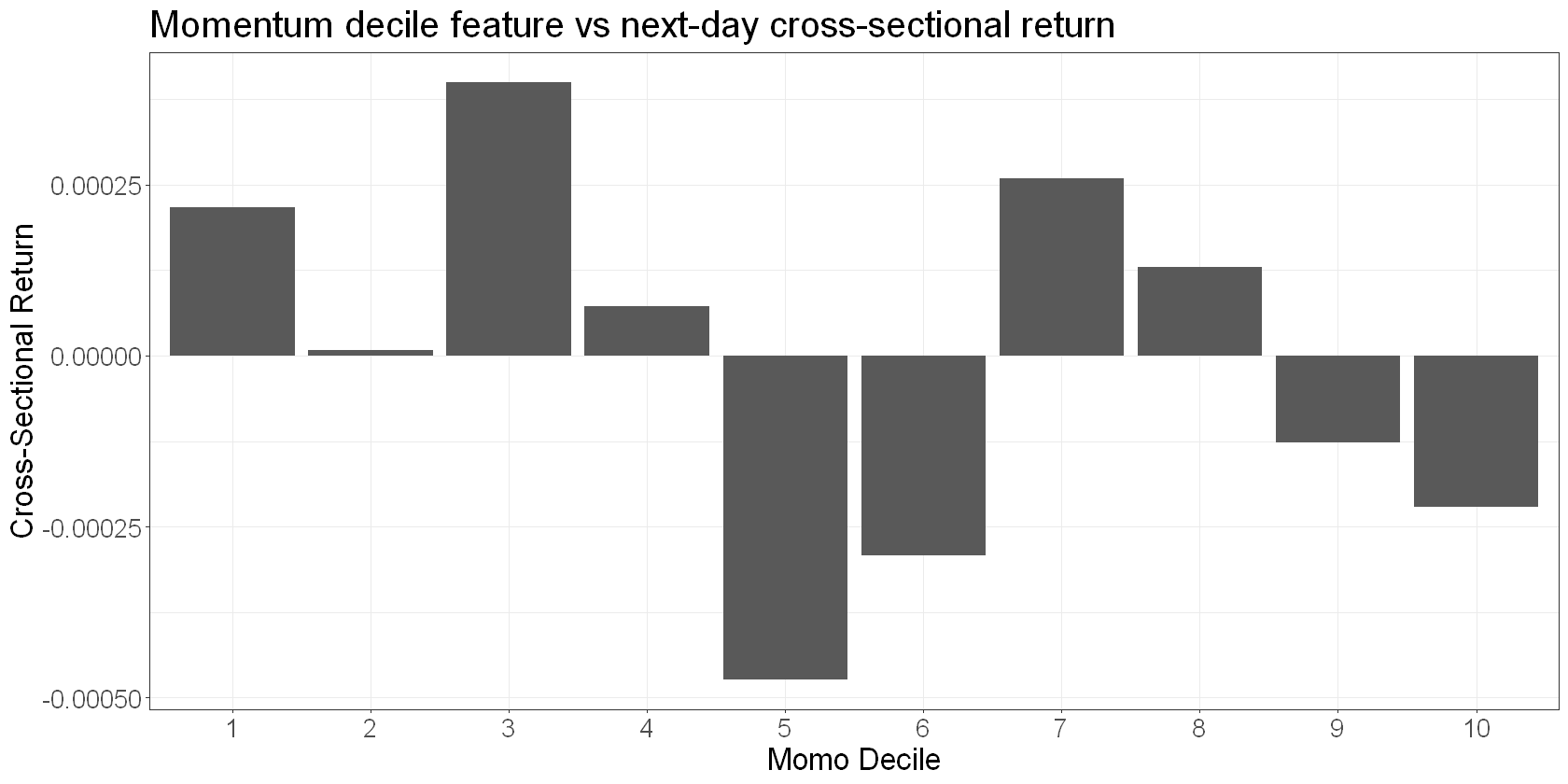

Here’s the momentum factor:

features_scaled %>%

group_by(decile_momo) %>%

summarise(

mean_return = mean(demeaned_fwd_returns)

) %>%

ggplot(aes(x = factor(decile_momo), y = mean_return)) +

geom_bar(stat = "identity") +

labs(

x = "Momo Decile",

y = "Cross-Sectional Return",

title = "Momentum decile feature vs next-day cross-sectional return"

)

We see a very noisy relationship between 10-day cross-sectional momentum and next day relative returns. Interestingly, we see a roughly negative relationship, implying a slight mean-reversion effect rather than momentum.

More work to do#

There are all sorts of ways you can view your features.

Here, we’ve only looked at the mean returns to each discrete feature value, which is useful because by aggregating returns, we get a clear view of the on-average relationships. On the downside however, this approach hides nearly all of the variance. So in practice you’d also rely on other tools - for instance scatterplots and timeseries plots of cumulative next day returns to the feature.

We’ve also not looked at turnover implied by our signals. This is crucial when you start to consider costs.

You’d also want to look at look at the stability of the factors’ performance over time. For example, you may want to down-weight factors that outperformed in the past but have levelled off recently. What we’ve done here hides that level of detail.

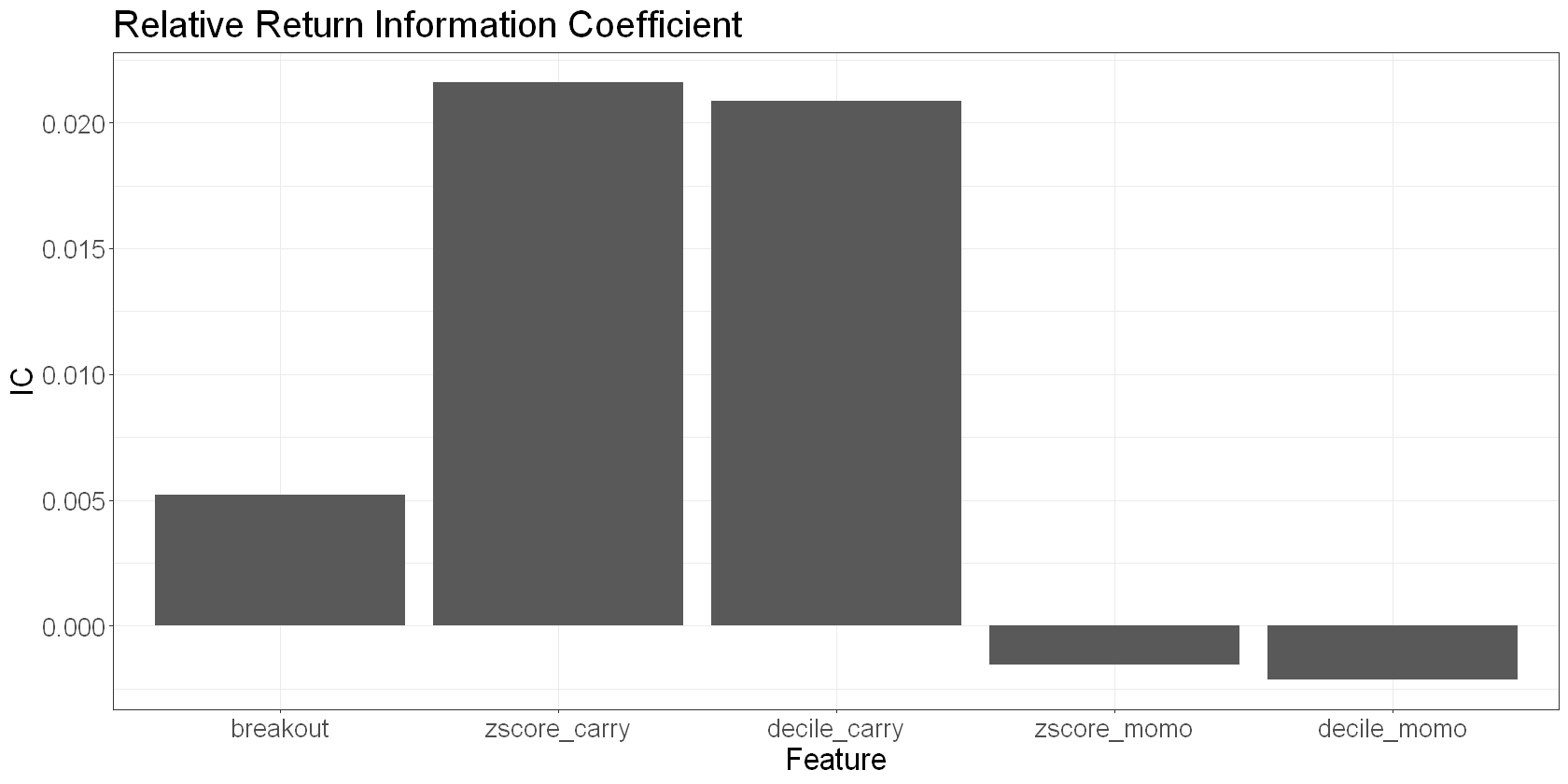

Information coefficient#

The information coefficient is simply the correlation of the feature with next day returns. It provides a single metric for quantifying the strength of the signal. We can calculate it for time-series returns and cross-sectional returns:

features_scaled %>%

pivot_longer(c(breakout, zscore_carry, zscore_momo, decile_carry, decile_momo), names_to = "feature") %>%

group_by(feature) %>%

summarize(IC = cor(value, demeaned_fwd_returns)) %>%

ggplot(aes(x = factor(feature, levels = c('breakout', 'zscore_carry', 'decile_carry', 'zscore_momo', 'decile_momo')), y = IC)) +

geom_bar(stat = "identity") +

labs(

x = "Feature",

y = "IC",

title = "Relative Return Information Coefficient"

)

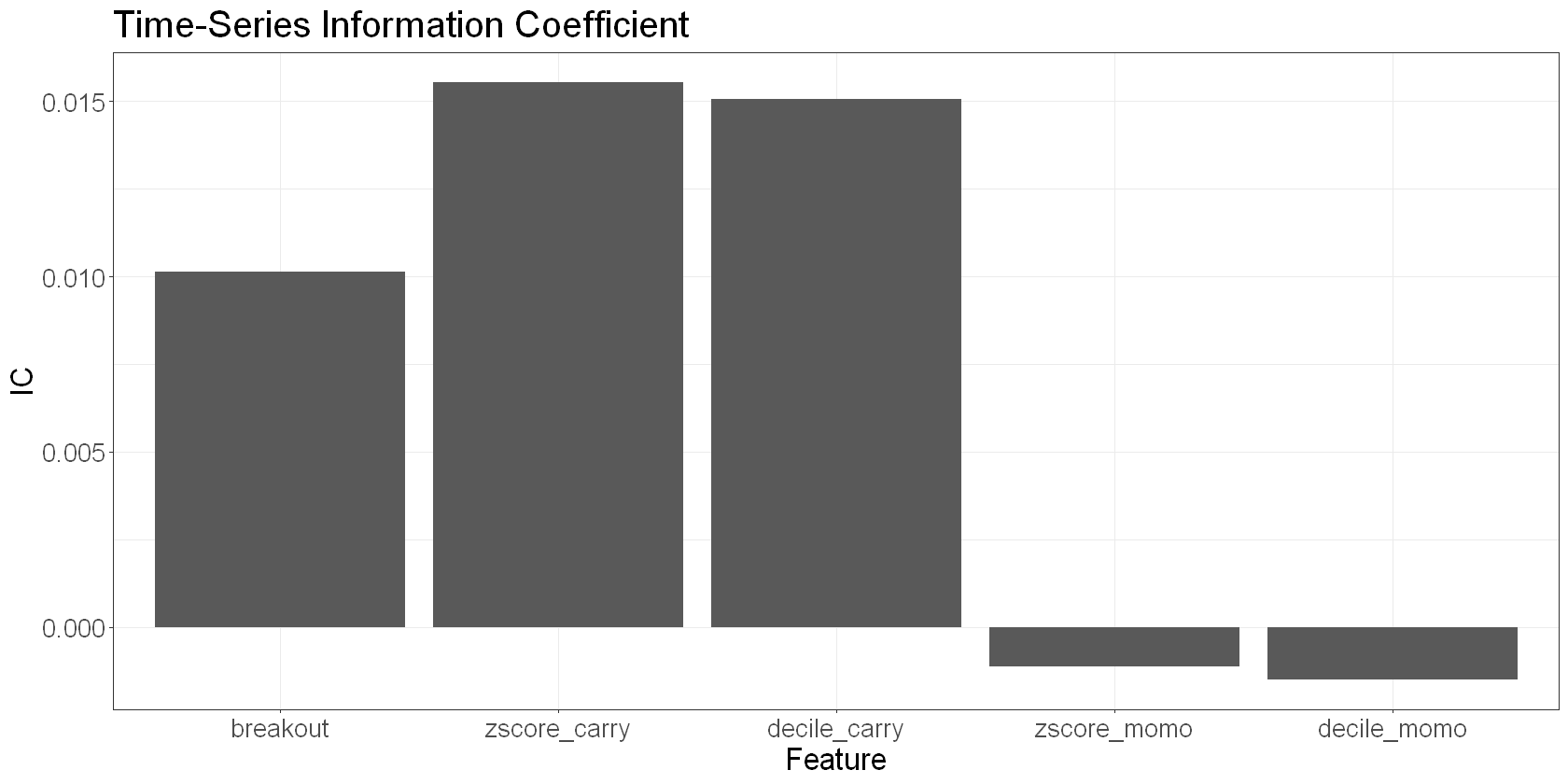

features_scaled %>%

pivot_longer(c(breakout, zscore_carry, zscore_momo, decile_carry, decile_momo), names_to = "feature") %>%

group_by(feature) %>%

summarize(IC = cor(value, total_fwd_return_simple)) %>%

ggplot(aes(x = factor(feature, levels = c('breakout', 'zscore_carry', 'decile_carry', 'zscore_momo', 'decile_momo')), y = IC)) +

geom_bar(stat = "identity") +

labs(

x = "Feature",

y = "IC",

title = "Time-Series Information Coefficient"

)

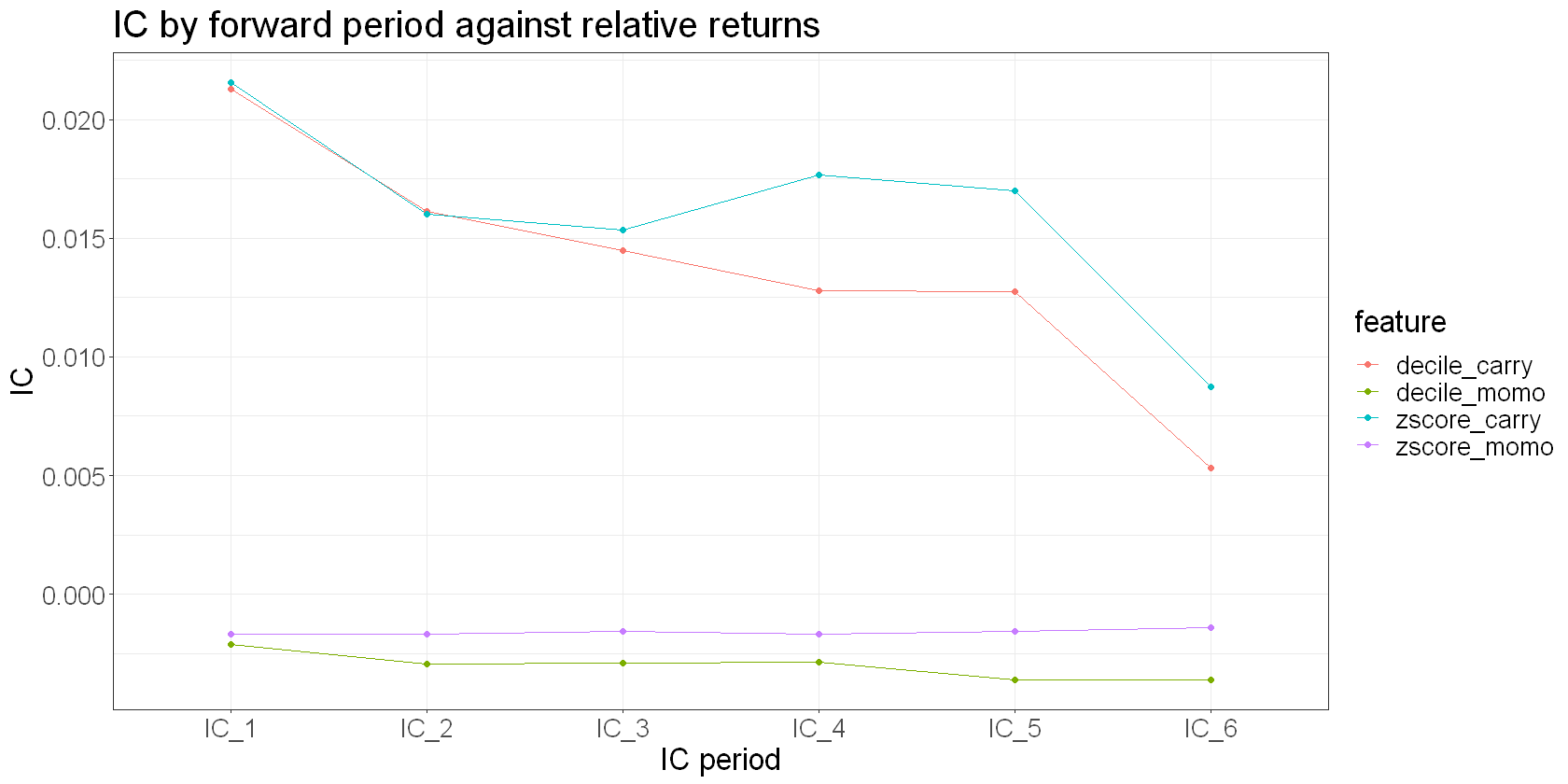

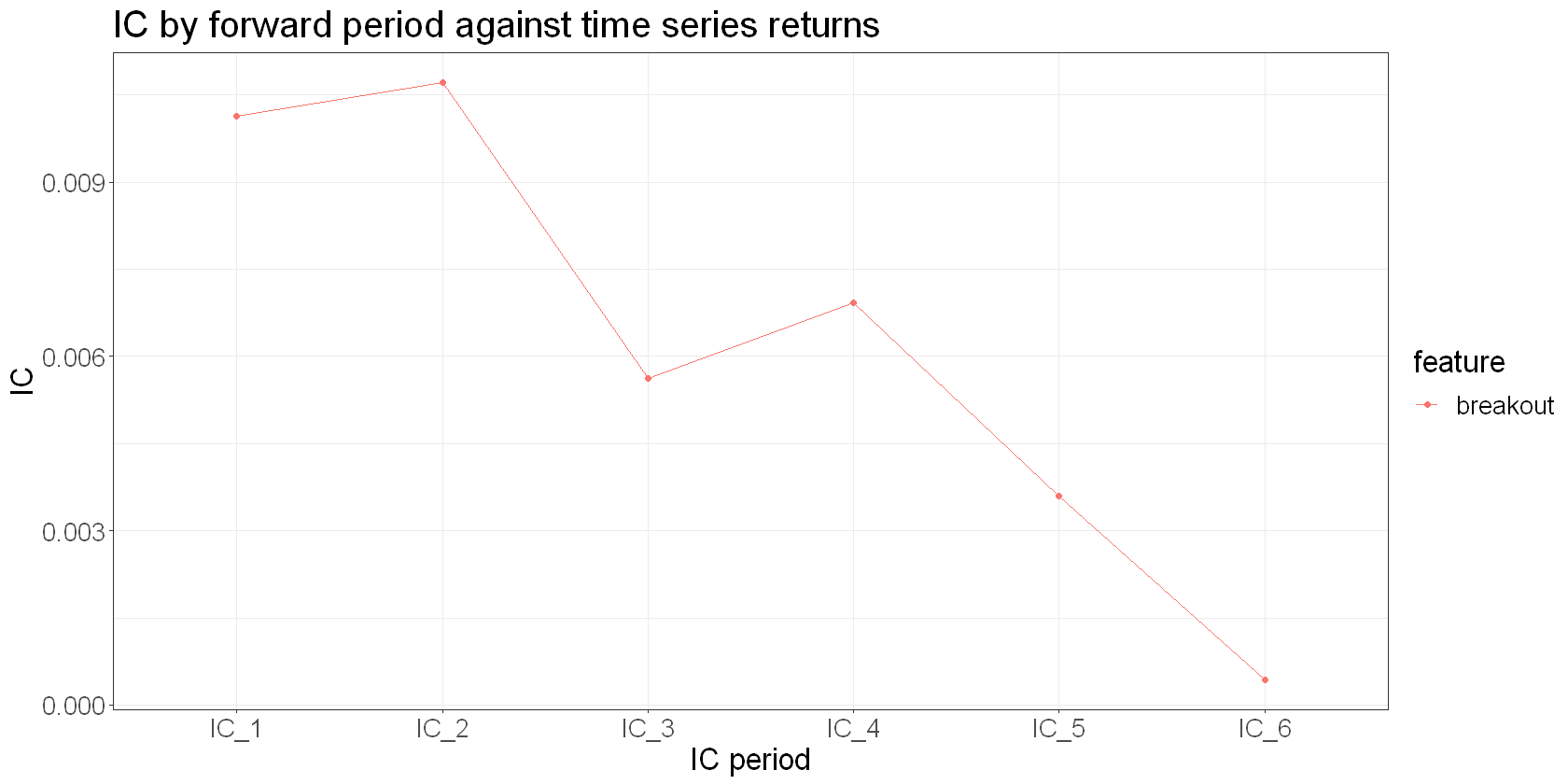

Decay characteristics#

Next, it’s useful to understand how quickly your signals decay. Signals that decay quickly need to be acted upon without delay, while those that decay slower are more forgiving to execute.

We’ll look at relative return IC for our cross-sectional features and the time-series return IC for our breakout feature.

features_scaled %>%

filter(is_universe) %>%

group_by(ticker) %>%

arrange(date) %>%

mutate(

demeaned_fwd_returns_2 = lead(demeaned_fwd_returns, 1),

demeaned_fwd_returns_3 = lead(demeaned_fwd_returns, 2),

demeaned_fwd_returns_4 = lead(demeaned_fwd_returns, 3),

demeaned_fwd_returns_5 = lead(demeaned_fwd_returns, 4),

demeaned_fwd_returns_6 = lead(demeaned_fwd_returns, 5),

) %>%

na.omit() %>%

ungroup() %>%

pivot_longer(c(zscore_carry, zscore_momo, decile_carry, decile_momo), names_to = "feature") %>%

group_by(feature) %>%

summarize(

IC_1 = cor(value, demeaned_fwd_returns),

IC_2 = cor(value, demeaned_fwd_returns_2),

IC_3 = cor(value, demeaned_fwd_returns_3),

IC_4 = cor(value, demeaned_fwd_returns_4),

IC_5 = cor(value, demeaned_fwd_returns_5),

IC_6 = cor(value, demeaned_fwd_returns_6),

) %>%

pivot_longer(-feature, names_to = "IC_period", values_to = "IC") %>%

ggplot(aes(x = factor(IC_period), y = IC, colour = feature, group = feature)) +

geom_line() +

geom_point() +

labs(

title = "IC by forward period against relative returns",

x = "IC period"

)

We see some potentially interesting things:

Our momentum decile feature shows only a slightly negative IC on day 1, but it decays quite slowly.

Our carry decile feature on the other hand has a significant and sticky IC.

features_scaled %>%

group_by(ticker) %>%

mutate(

fwd_returns_2 = lead(total_fwd_return_simple, 1),

fwd_returns_3 = lead(total_fwd_return_simple, 2),

fwd_returns_4 = lead(total_fwd_return_simple, 3),

fwd_returns_5 = lead(total_fwd_return_simple, 4),

fwd_returns_6 = lead(total_fwd_return_simple, 5),

) %>%

na.omit() %>%

ungroup() %>%

pivot_longer(c(breakout), names_to = "feature") %>%

group_by(feature) %>%

summarize(

IC_1 = cor(value, total_fwd_return_simple),

IC_2 = cor(value, fwd_returns_2),

IC_3 = cor(value, fwd_returns_3),

IC_4 = cor(value, fwd_returns_4),

IC_5 = cor(value, fwd_returns_5),

IC_6 = cor(value, fwd_returns_6),

) %>%

pivot_longer(-feature, names_to = "IC_period", values_to = "IC") %>%

ggplot(aes(x = factor(IC_period), y = IC, colour = feature, group = feature)) +

geom_line() +

geom_point() +

labs(

title = "IC by forward period against time series returns",

x = "IC period"

)

The breakout feature decays quite slowly too. It’s has a larger IC value than momentum, but less than carry.

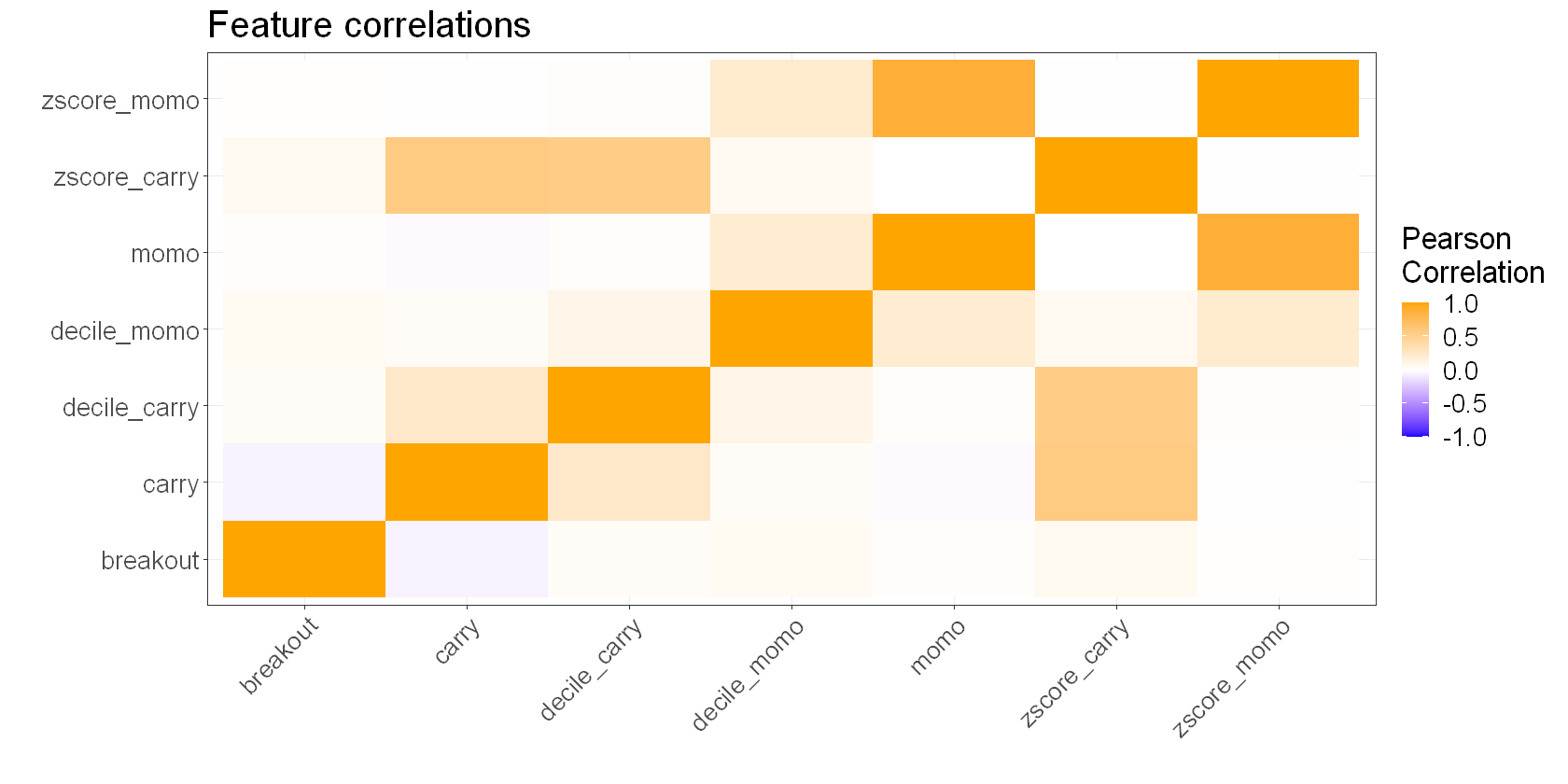

Feature correlation#

Next let’s look at the correlation between our features. Ideally, they’ll be uncorrelated or anti-correlated to provide a diversification effect.

features_scaled %>%

select('breakout', 'momo', 'carry', 'zscore_carry', 'decile_carry', 'zscore_momo', 'decile_momo') %>%

as.matrix() %>%

cor() %>%

as.data.frame() %>%

rownames_to_column("feature1") %>%

pivot_longer(-feature1, names_to = "feature2", values_to = "corr") %>%

ggplot(aes(x = feature1 , y = feature2, fill = corr)) +

geom_tile() +

scale_fill_gradient2(

low = "blue",

high = "orange",

mid = "white",

midpoint = 0,

limit = c(-1,1),

name="Pearson\nCorrelation"

) +

labs(

x = "",

y = "",

title = "Feature correlations"

) +

theme(axis.text.x = element_text(angle = 45, vjust = 1, size = 16, hjust = 1))

This isn’t a bad result. We see that our breakout and carry features are negatively correlated, and our momentum feature is almost uncorrelated with everything. That means that we should get some nice diversification from combining these signals.

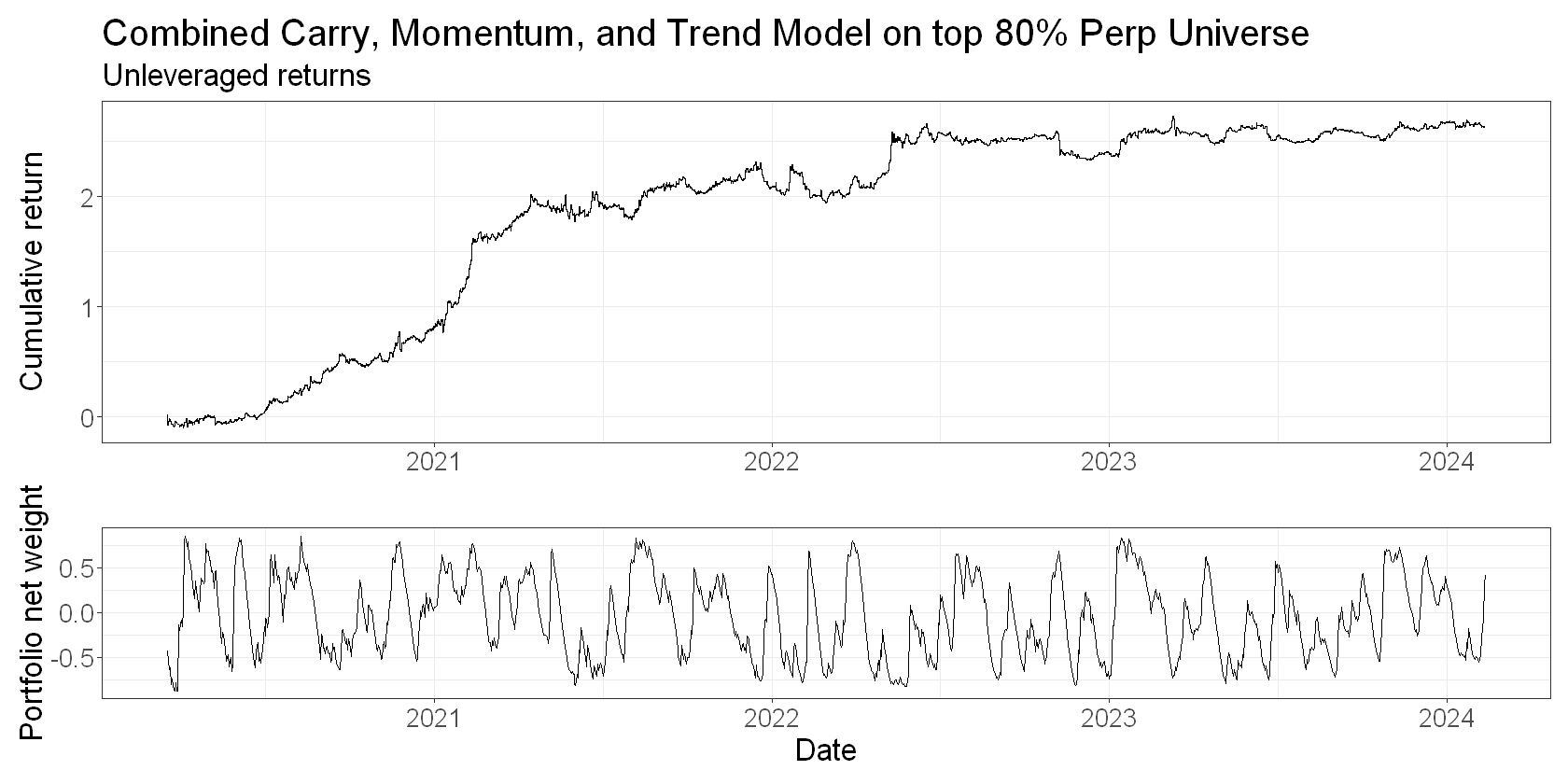

Combining our signals#

Based on what we’ve seen and what we understand about our signals, I think it makes sense to:

Overweight the

decile_carrysignal, as it has a high IC, decays slowly, and is anti-correlated with our other cross-sectional feature.Underweight the

decile_momosignal, as it has a small negative IC.Overweight the

breakoutsignal slightly. It has a moderate IC, decays slowly, and is uncorrelated with the other signals. Note that it also tilts the portfolio net long or short since it is used in the time series, not the cross-section.

You might want to weight these signals differently depending on your objectives and constraints, as well as what you understood from a more thorough analysis of each signal.

In any event, here’s a plot of the cumulative returns to our weighted signals over time.

I want to stress that this isn’t a backtest - it makes zero attempt to address the real-world issues such as costs, turnover, or universe restriction. It simply plots the returns to our combined features as if you could do so frictionlessly.

# start simulation from date we first have n tickers in the universe

min_trading_universe_size <- 10

start_date <- features %>%

group_by(date, is_universe) %>%

summarize(count = n(), .groups = "drop") %>%

filter(count >= min_trading_universe_size) %>%

head(1) %>%

pull(date)

model_df <- features %>%

filter(is_universe) %>%

filter(date >= start_date) %>%

group_by(date) %>%

mutate(

carry_decile = ntile(carry, 10),

carry_weight = (carry_decile - 5.5), # will run -4.5 to 4.5

momo_decile = ntile(momo, 10),

momo_weight = -(momo_decile - 5.5), # will run -4.5 to 4.5

breakout_weight = breakout / 2, # TODO: probably don't actually want to allow a short position based on the breakout feature

combined_weight = (0.5*carry_weight + 0.2*momo_weight + 0.3*breakout_weight),

# scale weights so that abs values sum to 1 - no leverage condition

scaled_weight = if_else(combined_weight == 0, 0, combined_weight/sum(abs(combined_weight)))

)

returns_plot <- model_df %>%

summarize(returns = scaled_weight * total_fwd_return_simple, .groups = "drop") %>%

mutate(logreturns = log(returns+1)) %>%

ggplot(aes(x=date, y=cumsum(logreturns))) +

geom_line() +

labs(

title = 'Combined Carry, Momentum, and Trend Model on top 80% Perp Universe',

subtitle = "Unleveraged returns",

x = "",

y = "Cumulative return"

)

weights_plot <- model_df %>%

group_by(date) %>%

summarise(total_weight = sum(scaled_weight)) %>%

ggplot(aes(x = date, y = total_weight)) +

geom_line() +

labs(x = "Date", y = "Portfolio net weight")

returns_plot / weights_plot + plot_layout(heights = c(2,1))

The portfolio net weight plot shows that the breakout feature tilts the portfolio net long or short.

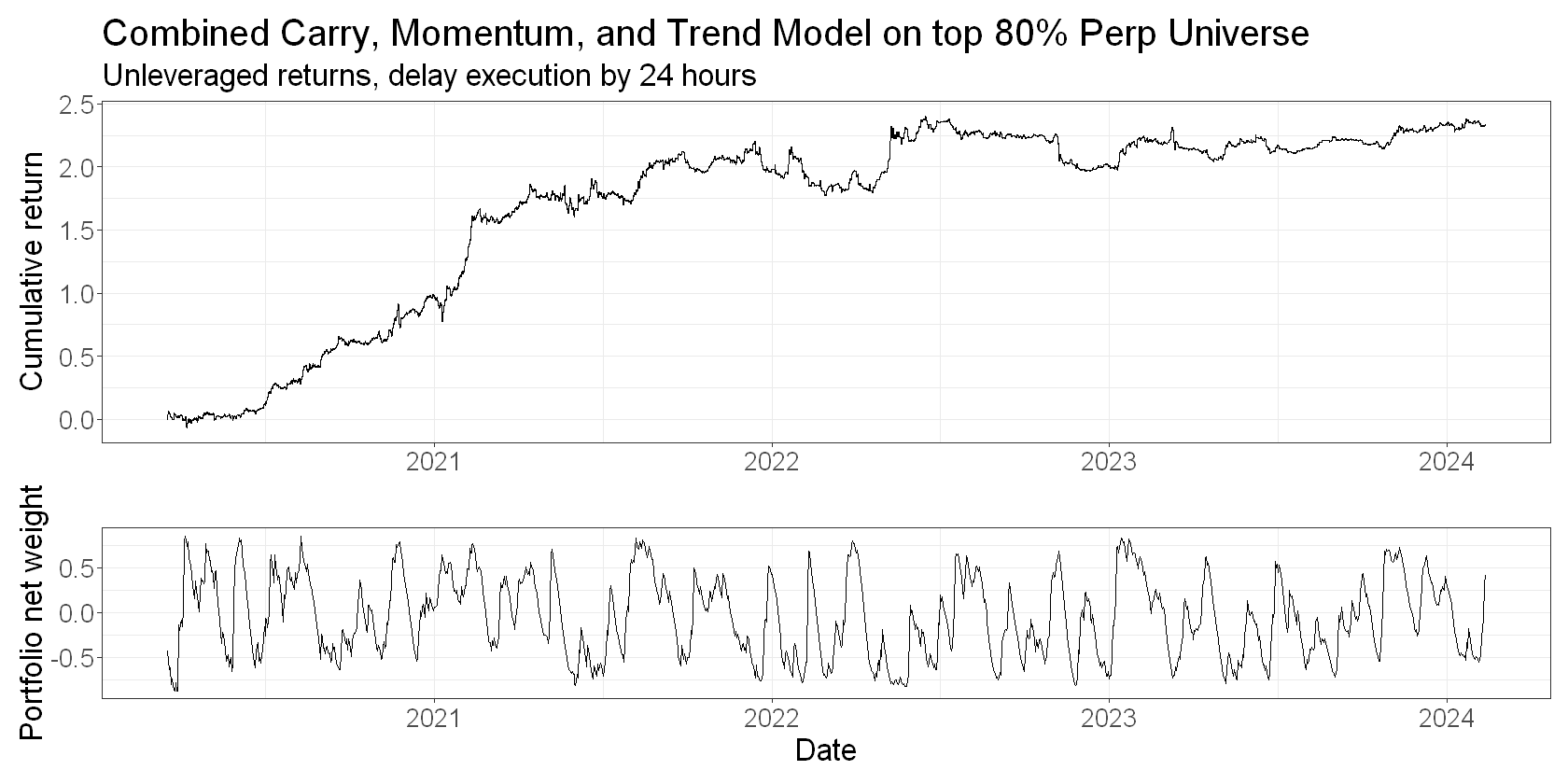

Note that because of the way we’ve aligned features and returns, the plot assumes you calculate the feature values at the daily close and act on them at that same price. While this is flattering, it’s not entirely inaccurate for a 24-7 market like crypto. For stocks, you’d definitely want to put another day’s gap between your feature and your forward return. This is illustrative for crypto too. Here’s what the returns would look like if we delayed acting on them for 24 hours:

returns_plot <- model_df %>%

summarize(returns = scaled_weight * total_fwd_return_simple_2, .groups = "drop") %>%

mutate(logreturns = log(returns+1)) %>%

ggplot(aes(x=date, y=cumsum(logreturns))) +

geom_line() +

labs(

title = 'Combined Carry, Momentum, and Trend Model on top 80% Perp Universe',

subtitle = "Unleveraged returns, delay execution by 24 hours",

x = "",

y = "Cumulative return"

)

weights_plot <- model_df %>%

group_by(date) %>%

summarise(total_weight = sum(scaled_weight)) %>%

ggplot(aes(x = date, y = total_weight)) +

geom_line() +

labs(x = "Date", y = "Portfolio net weight")

returns_plot / weights_plot + plot_layout(heights = c(2,1))

Conclusions#

In this article, we looked at ways to understand our signals, and to combine them using a weighting scheme derived from our understanding.

And let’s be honest - the work we did here to understand our signals was very shallow. There’s much more you can and should do in order to understand your signals. For example:

Scatterplots and time-series plots that don’t hide the variance

Stability of factor ICs over time

Stability of the signal itself and the implications for turnover

Impact of taking the tails only

etc

In the next article, we’ll tackle the questions of turnover and cost and use a heuristic approach to help us navigate these trade-offs. We’ll also be a little more careful with universe selection (we don’t want to be trading the top 80% of perpetual contracts).

In a future article, we’ll also explore how we can use mean-variance optimisation to achieve the same goal.